Tenstorrent and the State of AI Hardware Startups

Semi-custom silicon is a bigger problem than Nvidia.

Irrational Analysis is heavily invested in the semiconductor industry.

Please check the ‘about’ page for a list of active positions.

Positions will change over time and are regularly updated.

Opinions are the author’s own and do not represent past, present, and/or future employers.

All content published on this newsletter is based on public information and independent research conducted since 2011.

This newsletter is not financial advice, and readers should always do their own research before investing in any security.

Feel free to contact me via email at: irrational_analysis@proton.me

I wrote some negative things after seeing Tenstorrent’s Hot Chips 2024 presentation.

A lot of Tenstorrent employees subscribed because of this post.

Surprisingly, David Bennet (Chief Customer Officer) invited me to meet senior software, architecture, and customer relations leadership for an open discussion.

The meeting lasted 1.5 hours and was a ton of fun.

Before going over the actual content of this post, I would like to discuss the topic of bias.

Am I biased? Yes! This is why I regularly post all positions larger than 1%, along with average price.

The Tenstorrent people are very nice, and it was a lot of fun talking to them. This writeup is largely positive because Tenstorrent did a very good job of answering my questions with honest, technical answers. It’s up to you to decide for yourself if what I write is trustworthy.

I hold no economic interest in Tenstorrent.

Tenstorrent did not pay me any money or provide gifts of significant value.

I ate one cookie and drank a bottle of water.

Got a (branded) swag bag containing a hat, thermos, and cable carrying/travel bag.

No Tenstorrent staff have reviewed or edited this post. They will read it at the same time as everyone else.

Made a framework for thinking about AI hardware startups at the end so this post has value to people who are not interested in Tenstorrent.

Contents:

Tenstorrent Technical Overview

Discussion Summary

Register Spilling and v_

Baby RISC-V Fragmentation

Baby RISC-V Capabilities

History of the Software Stacks

Latency

Mixed Intelligence

Davor Capalija Whiteboard Diagram

Tenstorrent Opinion and Valuation Framework

Broader AI Hardware Startup Framework

[1] Tenstorrent Technical Overview

Blackhole (gen 2) has detailed information and will be the focus of discussion.

Main takeaways for Grendel (gen 3):

Move to chiplet architecture.

Separate high-performance RISC-V CPU cores and AI cores into separate chiplets.

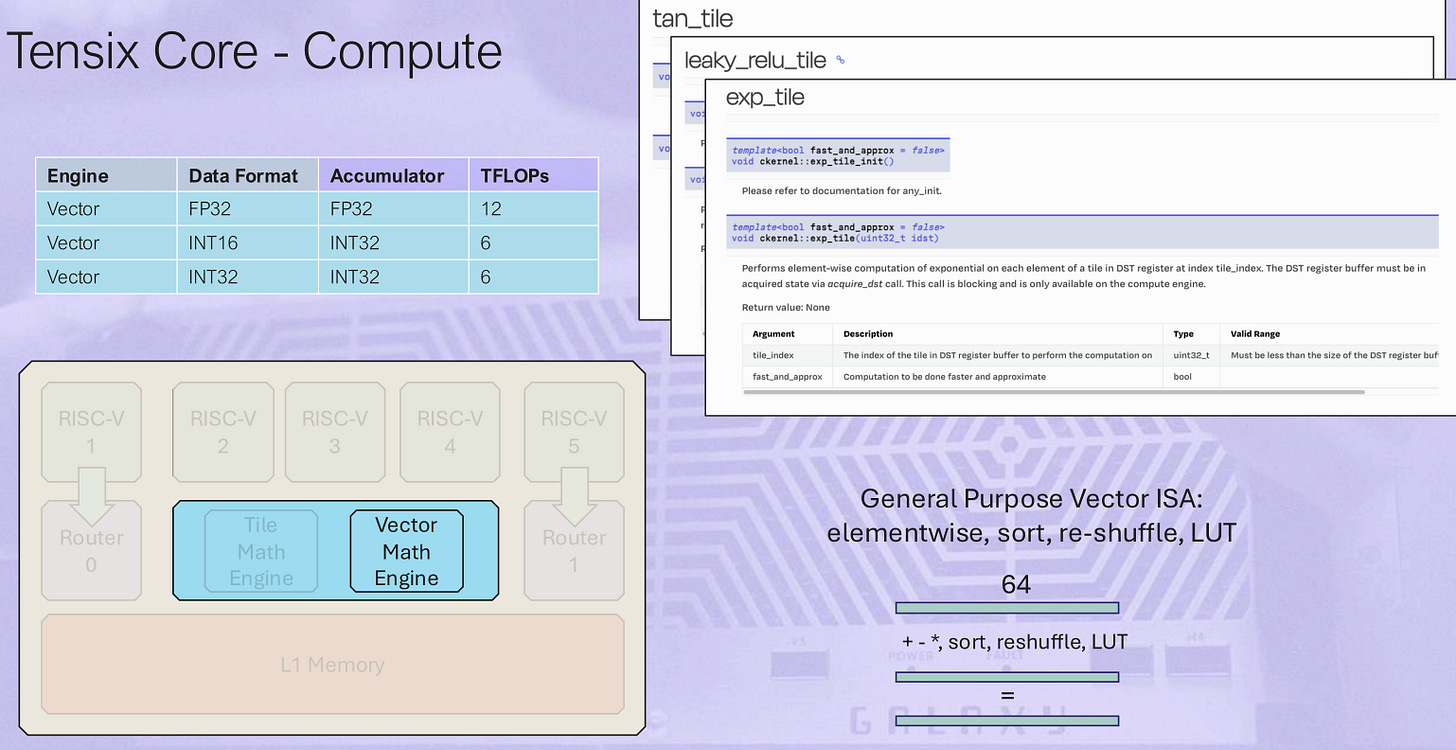

Tenstorrent architecture is a mesh topology with a variety of blocks.

The 16 big RISC-V CPU cores are for general purpose code. You can run Linux on them.

Baby RISC-V cores are super tiny embedded CPU cores. The 752 baby RISC-V cores take up less than 1% of die area.

The point of these baby RISC-V cores is to launch kernels. They are basically the control logic.

Tensix cores are the AI compute building blocks. Each has five baby RISC-V to handle kernel launches. “Compute” is vector and matrix math engines.

Note that two of the five baby RISC-V cores in each Tensix core are dedicated to router nodes (data movement). The data-movement model is important for understanding many of the decisions made in this architecture.

The compiler for these baby RISC-V cores is a lightly modified GCC. Users do not need to plan kernel splitting across the three compute-assigned baby RISC-V cores because the compiler does this automatically. In other words, you only need to write one compute kernel, in theory.

The baby RISC-V cores in the DRAM and Ethernet “cores” act purely as router nodes.
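The data-movement model can be made concrete with a small sketch. It treats one core as a three-stage pipeline. A reader stands for one data-movement baby RISC-V. A compute stage stands for the three compute baby RISC-V, collapsed into one function. A writer stands for the other data-movement core. Small bounded queues stand in for on-core SRAM buffers. The function names, buffer depth, and the "double every element" math are hypothetical. The layout is loosely based on my reading of Tenstorrent's public programming examples. This is not Tenstorrent's API.

```python
import queue
import threading

# Hypothetical toy model of one Tensix-style core; not Tenstorrent code.

def reader(tiles, cb_in):
    # Data-movement core #1: fetch tiles (e.g. from DRAM) into the input buffer.
    for t in tiles:
        cb_in.put(t)
    cb_in.put(None)  # end-of-stream marker


def compute(cb_in, cb_out):
    # Compute stage: stand-in for the matrix/vector engines (just doubles each element).
    while (t := cb_in.get()) is not None:
        cb_out.put([2 * x for x in t])
    cb_out.put(None)


def writer(cb_out, results):
    # Data-movement core #2: drain finished tiles back out (e.g. to DRAM or the NoC).
    while (t := cb_out.get()) is not None:
        results.append(t)


def run_core(tiles, depth=2):
    # Bounded queues model small on-core SRAM buffers between the stages.
    cb_in, cb_out = queue.Queue(maxsize=depth), queue.Queue(maxsize=depth)
    results = []
    threads = [
        threading.Thread(target=reader, args=(tiles, cb_in)),
        threading.Thread(target=compute, args=(cb_in, cb_out)),
        threading.Thread(target=writer, args=(cb_out, results)),
    ]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return results


print(run_core([[1, 2], [3, 4], [5, 6]]))  # [[2, 4], [6, 8], [10, 12]]
```

The bounded queues provide back-pressure. If compute falls behind, the reader blocks instead of overrunning the buffer. That is the property you want when buffers live in a few megabytes of SRAM.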

[2] Discussion Summary

I have tried to organize the 90 minutes of discussion into something readable. Only have my illegible notes, mediocre memory, and a few pictures of whiteboard drawings to work with.

Converting a free-form conversation amongst ~10 people into a coherent written summary is surprisingly difficult. If you have feedback, please share.

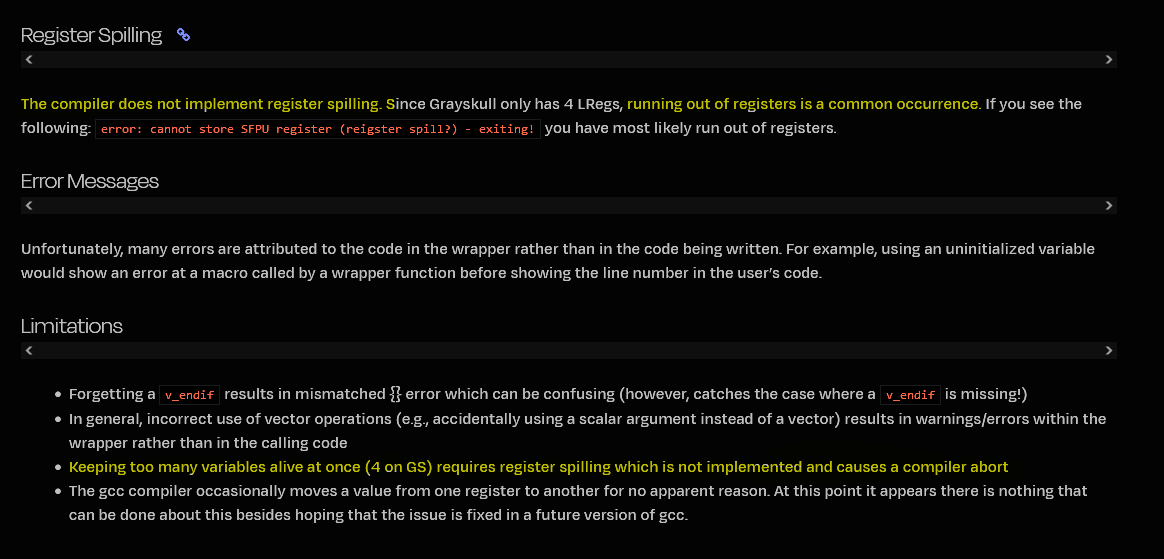

[2.a] Register Spilling and v_

To prepare, I browsed the Tenstorrent documentation to see if anything jumped out.

Initially, I thought that register spilling was a super important feature. Tenstorrent responded that the baby RISC-V cores are so small, it does not make sense to have register spilling given the intended programming model.

Cores are dedicated either to data movement (router node control) or to the compute units. For compute, three baby RISC-V cores are assigned as a group. The higher-level compiler calls GCC five times for each Tensix core, once per baby RISC-V.

So the user compute kernel is already split before the three compute instances of GCC get called.
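Here is a hypothetical sketch of what "five GCC invocations per Tensix core" could look like. Several details are invented for illustration: the compiler name `riscv-gcc`, the flags, and the `ROLE_*`/`STAGE_*` macro names. The unpack/math/pack split of the three compute cores is also my assumption, not something Tenstorrent told me. The only point is that one user compute source fans out into three separately compiled programs.

```python
# Hypothetical sketch: one core's kernels fan out into five compiler invocations.
# Compiler name, flags, and ROLE_*/STAGE_* macro names are invented for illustration.

DATA_MOVEMENT_ROLES = ["ROLE_READER", "ROLE_WRITER"]           # two data-movement cores
COMPUTE_STAGES = ["STAGE_UNPACK", "STAGE_MATH", "STAGE_PACK"]  # three compute cores (assumed split)


def build_commands(reader_src, writer_src, compute_src, cc="riscv-gcc"):
    """Return the five compiler command lines (as argv lists) for one Tensix-style core."""
    cmds = []
    for role, src in zip(DATA_MOVEMENT_ROLES, (reader_src, writer_src)):
        cmds.append([cc, f"-D{role}", "-O2", "-c", src, "-o", f"{role.lower()}.o"])
    # The single user compute kernel is compiled three times; a different
    # preprocessor define tells each build which slice of the kernel to keep.
    for stage in COMPUTE_STAGES:
        cmds.append([cc, f"-D{stage}", "-O2", "-c", compute_src, "-o", f"{stage.lower()}.o"])
    return cmds


for argv in build_commands("reader.c", "writer.c", "matmul.c"):
    print(" ".join(argv))  # printed only; nothing is actually executed
```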

Given the intended programming model, this seems fine. It still looks like a challenge for kernel writers, but nothing insurmountable.

These “v_” things showed up a lot and one line jumped out. It almost looked like this was some kind of sparsity support?

Turns out, this is Tenstorrent’s RISC-V way of implementing the masking features found in AVX-512 (x86) and SVE (AArch64).

Masking lets lanes that fail a condition skip work instead of branching, which helps with power saving.
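Here is a minimal sketch of what masking means, in plain Python. A comparison produces a per-lane predicate. The operation only takes effect in lanes where the predicate is true, and the other lanes keep their old value. This is "merge" masking in AVX-512 terms. The sketch only mirrors the concept. It is not SFPI or any other Tenstorrent code, and `masked_apply` is a hypothetical name.

```python
def masked_apply(values, mask, op):
    """Apply op only in lanes where mask is True; other lanes keep their old value."""
    return [op(v) if m else v for v, m in zip(values, mask)]


x = [-3.0, 1.5, -0.5, 2.0]
mask = [v < 0 for v in x]                # like a vector compare producing a predicate
y = masked_apply(x, mask, lambda v: -v)  # absolute value: negate only the masked lanes
print(mask)  # [True, False, True, False]
print(y)     # [3.0, 1.5, 0.5, 2.0]
```

On real SIMD hardware, all lanes run in lockstep. The mask decides which results are written back, so there is no per-lane branch to mispredict.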

[2.b] Baby RISC-V Fragmentation

At Hot Chips 2024, there was a moment in Q&A that I misinterpreted.

Incorrectly thought that the baby RISC-V cores have different ISAs.

Tenstorrent clarified that this is not correct. All 752 baby RISC-V cores have the same ISA. The ones assigned to the NoC (data movement) have structural optimizations for that workload (physical design…?) but no ISA difference.

One of my major concerns was RISC-V ISA fragmentation, and they clarified that this is not a problem. The same modified GCC gets called for everything.

[2.c] Baby RISC-V Capabilities

One of the programming examples on GitHub caught my interest.

I was curious about the compute capabilities of these baby RISC-V cores. Can users weave in some branchy logic or niche calculations? Is anyone using this capability?

They were honest in answering this question. Yes, it is possible to run complex control flow on the baby RISC-V cores assigned to data movement, but users will likely hit a bottleneck. These tiny cores are meant for kernel launches.
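A two-stage throughput model shows why branchy logic on a data-movement core tends to become a bottleneck. In a pipeline, steady-state throughput is set by the slowest stage. The cycle counts and clock below are hypothetical placeholders, not Tenstorrent figures.

```python
def tiles_per_second(move_cycles, compute_cycles, extra_logic_cycles=0, clock_hz=1e9):
    """Steady-state throughput of a two-stage pipeline is set by its slowest stage.

    move_cycles: cycles the data-movement core spends per tile on its normal job.
    extra_logic_cycles: hypothetical extra branchy work added to that same core.
    """
    data_movement = move_cycles + extra_logic_cycles
    bottleneck = max(data_movement, compute_cycles)
    return clock_hz / bottleneck


base = tiles_per_second(100, 120)
light = tiles_per_second(100, 120, extra_logic_cycles=15)  # still hidden behind compute
heavy = tiles_per_second(100, 120, extra_logic_cycles=80)  # data movement becomes the limiter
print(light == base)           # True
print(round(heavy / base, 2))  # 0.67
```

A little extra logic is free as long as it fits in the slack behind compute. Once it exceeds that slack, every added cycle directly costs throughput.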

Given the open-source nature of everything Tenstorrent, I still have hope some cracked programmer figures out how to make something cool happen along these lines.

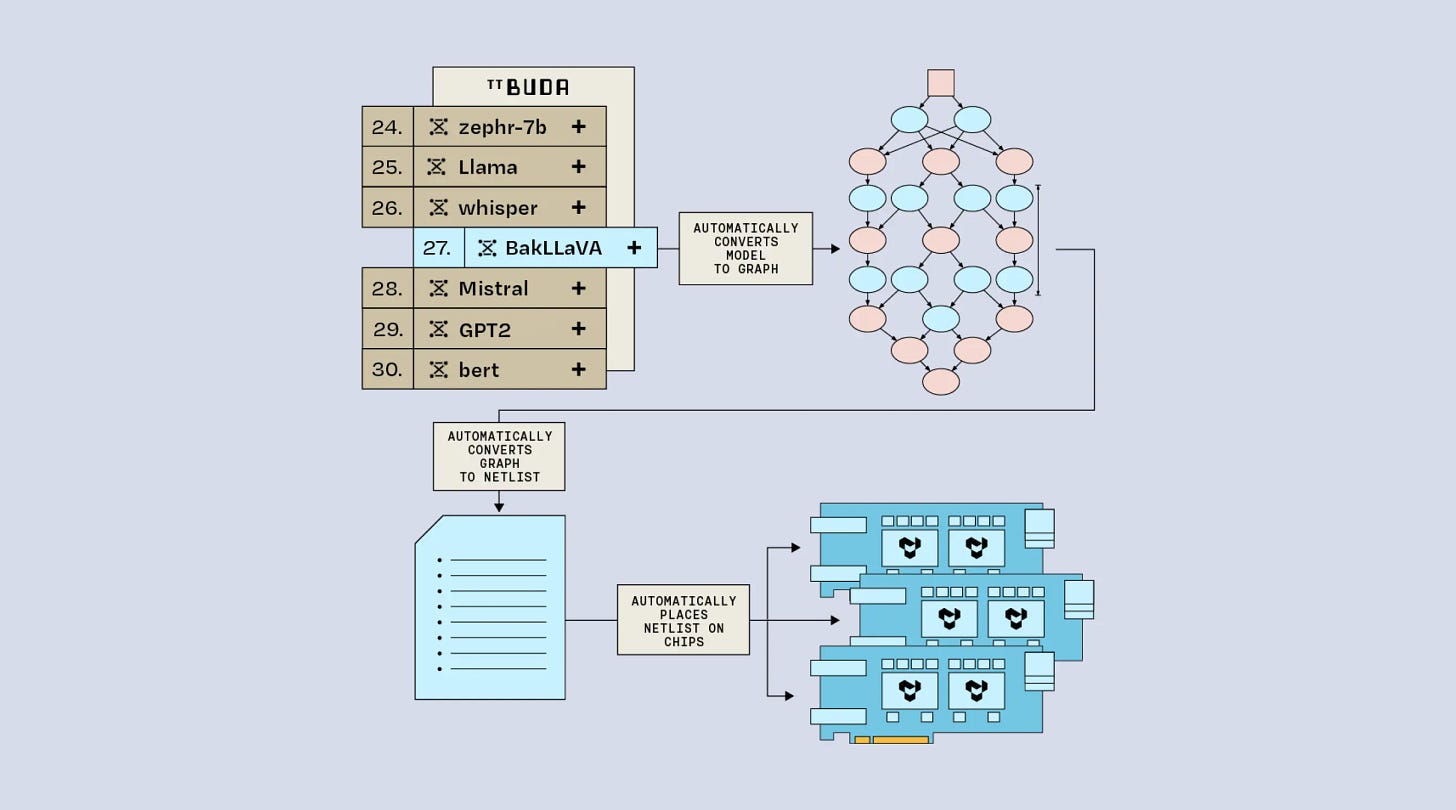

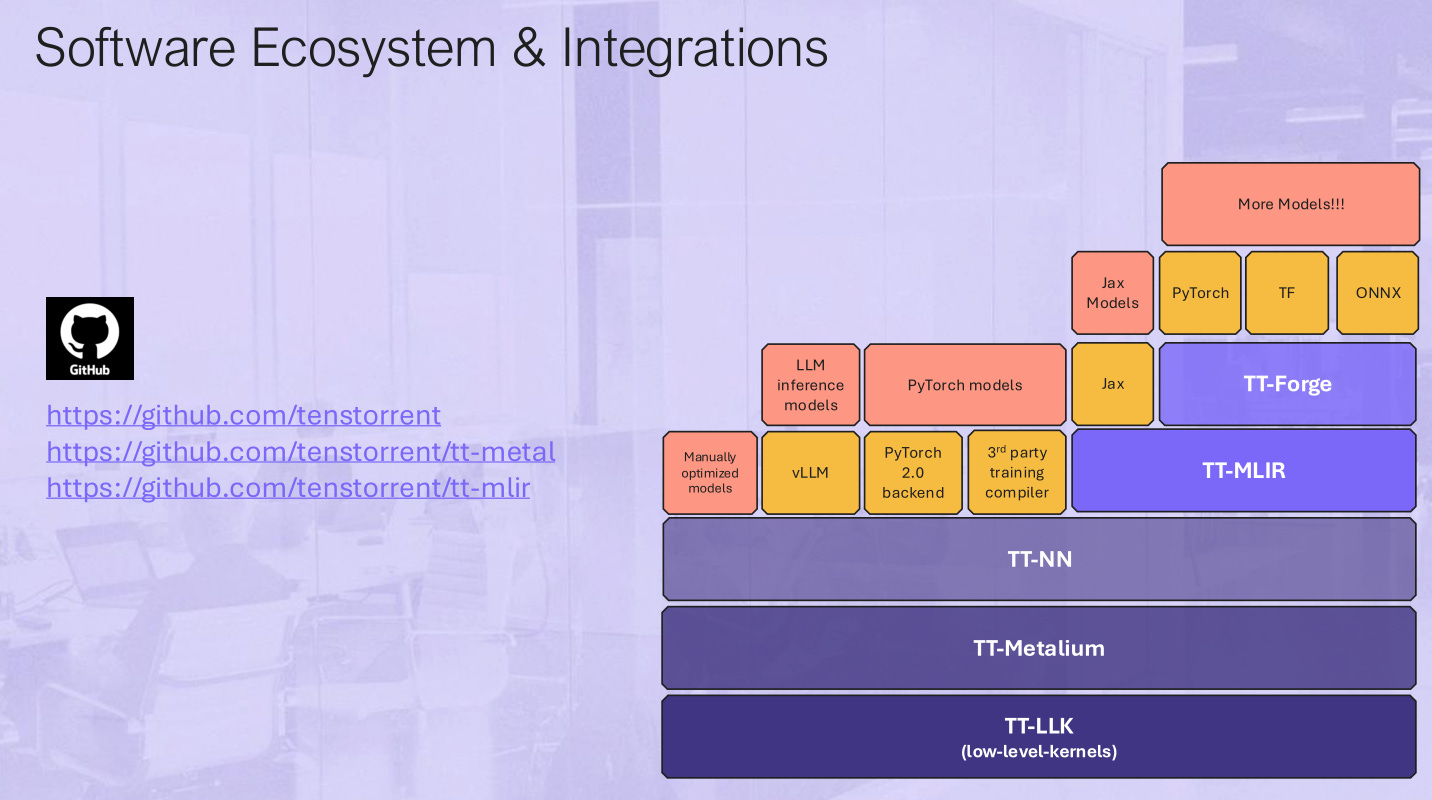

[2.d] History of the Software Stacks

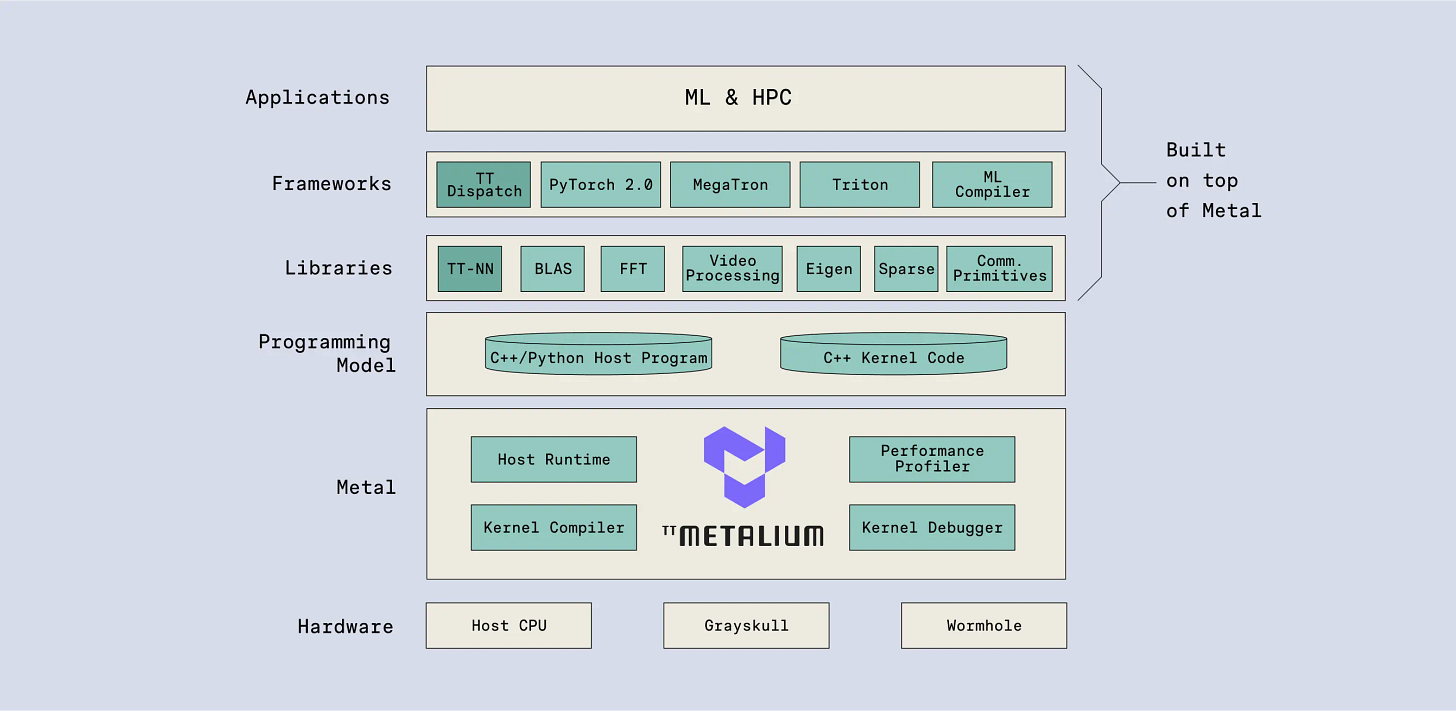

There are a wide variety of software stacks supported by Tenstorrent.

The current software stack is attempt #6. A lot of good stuff but was curious what happened to attempts 1 thru 5.

Apparently, the old way was to rebuild the software stack for each new hardware revision and target workload.

They literally had the RTL engineers write software kernels… lol.

But the new method is to build unified software from the ground up and down. Let people come in from the top (ML model —> graph —> netlist) via Buda or from the bottom (low-level kernels), or somewhere in-between (Metalium, TT-NN, TT-MLIR).

Entry points for everyone. Maximum open-source and transparency. Easy access to developer kits/hardware.

These days, some of the best kernels for Tenstorrent hardware are written by new grads and hobbyists on the official Discord.

[2.e] Latency

One major concern I had (and still have, to an extent) is latency affecting the mesh topology of Tenstorrent’s scale-out. Latency kills inference performance.

The first argument provided by Tenstorrent is their scale-out is much lighter than standard RDMA over Converged Ethernet (RoCE).

Apparently, they stripped a lot of features out. No TCP/IP.
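A naive model shows why per-hop overhead matters in a mesh. The worst-case path grows with mesh size, and every hop pays the protocol cost again, so trimming each hop compounds. The per-hop latency is a placeholder, not a measured Tenstorrent number. The model ignores contention, serialization, and any routing shortcuts.

```python
def mesh_worst_case_hops(rows, cols):
    """Longest shortest path in a rows x cols 2D mesh (corner to opposite corner)."""
    return (rows - 1) + (cols - 1)


def worst_case_latency_us(rows, cols, per_hop_us):
    """Naive model: every hop adds the same fixed forwarding + link delay."""
    return mesh_worst_case_hops(rows, cols) * per_hop_us


PER_HOP_US = 1.0  # hypothetical placeholder, not a measured number
for n in (2, 4, 8):
    print(f"{n}x{n} mesh: {mesh_worst_case_hops(n, n)} hops, "
          f"{worst_case_latency_us(n, n, PER_HOP_US):.1f} us")
```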

Other strategies they have been working on:

Overlapping of data prefetch.

Enabled by pipelining.

Runs on DRAM baby RISC-V cores.

Z-shortcut very important for latency optimization.
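A simple timing model shows the payoff of overlapping prefetch with compute. Assume each tile takes `load` time units to fetch and `compute` units to process. Assume two buffers let the next tile load while the current one computes (double buffering). This is a textbook model with made-up numbers, not Tenstorrent's actual scheme.

```python
def sequential_time(n_tiles, load, compute):
    # No overlap: each tile waits for its own load before computing.
    return n_tiles * (load + compute)


def pipelined_time(n_tiles, load, compute):
    # Double buffering: tile i+1 loads while tile i computes, so each
    # middle step costs max(load, compute) instead of load + compute.
    if n_tiles == 0:
        return 0
    return load + (n_tiles - 1) * max(load, compute) + compute


print(sequential_time(8, 3, 5))  # 64
print(pipelined_time(8, 3, 5))   # 43
```

When compute dominates, the load time is almost entirely hidden. Only the first load and the last compute remain exposed.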

Tenstorrent showed me some internal-only slides on their recent latency reduction progress including performance progression of Llama 3.1 70B inference. Non-public slides and the ~10 minutes of conversation related to this topic were compelling.

(still a huge amount of work to be done to be clear)

They claim the slides will eventually be published as a case-study on their GitHub.

¯\_(ツ)_/¯

[2.f] Mixed Intelligence

Jim Keller (CEO of Tenstorrent) has previously worked on Tesla FSD. In multiple public talks, he has brought up an interesting line of thinking.

The anecdote is simple. Jim Keller says he taught his daughters to drive with two hours of practice. He did not need to feed his daughters exabytes of video data and spend months having them pore over all that video.

Tenstorrent has an implicit view that the future of AI is mixed-workload, not pure linear algebra spam. Yes, MATMUL go BRRRRRR is valuable, but CPU workloads will be needed in the future. That is the hope.

So far, this has not played out. Nvidia Grace is an overpriced memory controller. AMD had mixed CPU and GPU chiplets in MI300A (almost no sales outside of two traditional HPC supercomputers) but is going all GPU chiplets in future generations. The vast majority of AMD datacenter GPU volume is the pure-GPU MI300X.

What’s interesting is that Tenstorrent is the last company standing that works on AI and has a good CPU microarchitecture team. If future AI workloads need CPU integration, they are the only well-positioned vendor.

I brought this up and asked Tenstorrent if they have customers moving towards or interested in a more mixed AI workload and they said not yet.

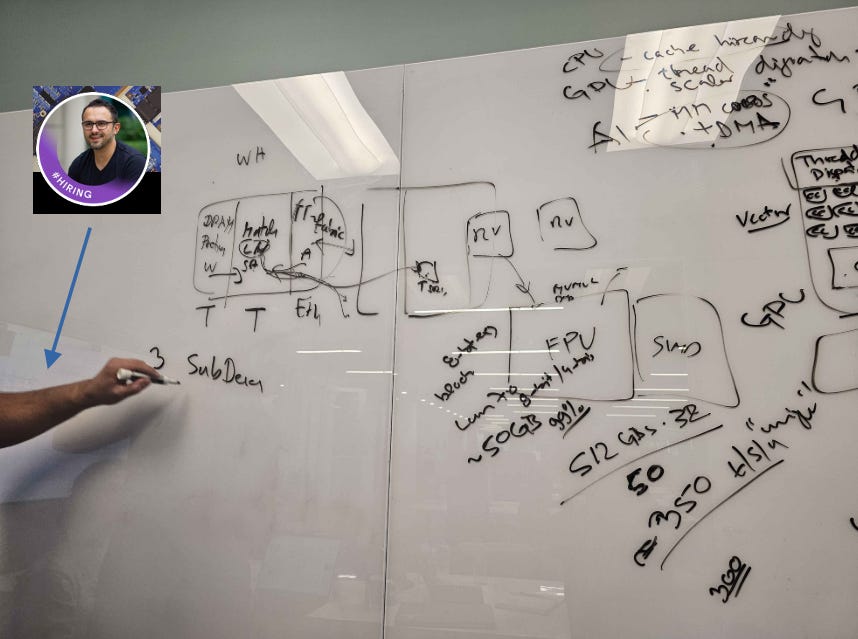

[3] Davor Capalija Whiteboard Diagram

Davor (Senior Software+Architecture Fellow) spent the majority of the meeting drawing stuff on a whiteboard and explaining the Tenstorrent architecture in great detail. He even went beyond and made direct comparisons to Nvidia/GPU (rip AMD), Google TPU, Groq, SambaNova, and Cerebras.

I found his framework so compelling that all this material needed to be broken out into a dedicated section.

Took pictures of the whiteboard (with permission) and re-created everything in Visio.

His arguments are very good. Going to tell you all up-front that I agree with 80% of what he said/drew.

A paraphrased summary of Davor’s arguments will be written in italics. I will add my comments (including disagreements) afterward in regular (non-italicized) font.

CPU workloads are designed around cache hierarchy.

GPUs are originally built for graphics and designed for thread-based scaling. Each CUDA core is really an ALU. The streaming multiprocessor (SM) is the real GPU “core”. GPUs have fewer cache levels (only L1 and L2) compared to CPUs.

AI workloads need many cores and a DMA engine. Including L2 does not make sense.

Tenstorrent architecture is essentially a GPU, but better.

Cores are correct size in terms of compute and SRAM (2MB).

No L2 cache.

Using baby RISC-V CPU cores for kernel launching, data movement, and scheduling.

All the flexibility of a CPU (it is literally a CPU with full ISA).

No legacy structures to support. (graphics)

TT architecture built around DMA from ground up.

Nvidia Tensor Memory Accelerator is “bolted-on”.

CUDA software has to support TMA and legacy memory models.

With respect to the non-Nvidia competition, Tenstorrent is also better.

Google TPU has large cores that make utilization problematic.

Exotic “kernel-less” startups like Groq, Cerebras, and SambaNova place enormous burden on ahead-of-time compilation.

Cores have kilobytes of memory.

Schedule stuff at compile time or get severe underutilization.
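A toy example shows why ahead-of-time scheduling puts the burden on the compiler. Below, every op in a tiny dataflow graph gets a fixed start cycle at "compile time". The sketch assumes each latency is known exactly. It is a generic ASAP (as-soon-as-possible) schedule that ignores resource limits, written for this post. It is not how Groq, Cerebras, or SambaNova compilers actually work. If any real latency differs from the assumed one (a DRAM stall, a slow link), the precomputed timeline is wrong. That fragility is the burden being described. Requires Python 3.9+ for `graphlib`.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def static_schedule(deps, latency):
    """Give every op a fixed start cycle ahead of time (ASAP, no resource limits).

    deps maps op -> set of ops it must wait for; latency maps op -> cycles,
    which this toy assumes are known exactly at compile time.
    """
    start = {}
    for op in TopologicalSorter(deps).static_order():
        # An op starts as soon as its slowest predecessor has finished.
        start[op] = max((start[d] + latency[d] for d in deps.get(op, ())), default=0)
    return start


# Hypothetical four-op graph with made-up latencies.
deps = {
    "load_a": set(),
    "load_b": set(),
    "matmul": {"load_a", "load_b"},
    "store": {"matmul"},
}
latency = {"load_a": 4, "load_b": 6, "matmul": 10, "store": 2}
for op, cycle in sorted(static_schedule(deps, latency).items(), key=lambda kv: kv[1]):
    print(f"cycle {cycle:>3}: {op}")
```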

I find Davor’s arguments compelling.

(Reminder that NVDA is my largest position at an average price of $16/share.)

Architecture design philosophy may be “correct”, but the existing CUDA moat does not care. Nvidia has way more resources and can easily support all the legacy stuff, various hacks, and win even if the architecture is sub-optimal. I know people who work in the Nvidia software performance optimization teams. They are very smart and there are way more of them than Tenstorrent has employees in total.

More on this in section [5].

The other disagreement I have is Davor lumping Groq, Cerebras, and SambaNova into a single category. These three architectures are wildly different.

Groq is 144-wide VLIW and has to compile everything ahead of time in a cycle-accurate manner. It is retarded.

I genuinely believe Groq is a fraud. There is no way their private inference cloud has positive gross margins.

Cerebras is a wafer full of tiny cores. Massive scheduling issues but not in the same way as Groq. If Davor just compared Cerebras with Tenstorrent then I would agree with all his whiteboard arguments.

Looking forward to the Cerebras IPO so I can trade the crap out of this stock.

SambaNova is a CGRA (very cool super-FPGA) that is in its own category. Compiler is more like FPGA logic synthesis.

[4] Tenstorrent Opinion and Valuation Framework

I went into the meeting thinking Tenstorrent is just another Nvidia-roadkill company doomed to death.

Left the meeting genuinely hopeful.

Every question I had got a good, well-explained, credible answer/counterargument. The only remaining technical concern I have is latency as that is a difficult problem.

What impressed me is that every single Tenstorrent person I talked to was smart and (more importantly) reasonable. It is very common for smart engineers to become delusionally optimistic, blinded by hubris.

This is not Tenstorrent. They are reasonable, realistic, and rational. If any AI hardware startup can break through the Nvidia and semi-custom (Google TPU, Amazon Trainium, Microsoft Maia, Meta <whatever the fuck it’s called>) silicon moats, it’s them.

Tenstorrent is the open-source champion of AI hardware. Everything about their developer strategy is correct. Multiple open software tools. Excellent (A+) community and developer engagement. Easy access to purchasing sample hardware.

Many technical/engineering problems need to be solved but this team can get it done. Very excited to see how next-gen Grendel plays out.

Now for the valuation question.

Tenstorrent recently closed a series D venture capital raise at a $2B valuation.

Earlier this year, I heard chatter that Tenstorrent was pivoting to selling RISC-V IP. This was a sign of weakness and desperation… until ARM decided to engage in moronic behavior.

Let’s take a look at the ARM stock chart.

Many (basically all) of my finance-land friends think the ARM valuation is nuts. Yes, there is low float and a crazy multiple but… it’s been trading for quite a while.

Given that ARM is aggressively raising license prices and royalty rates and has (metaphorically) nuked Qualcomm, RISC-V CPU IP clearly has a bright future.

I think it is reasonable to say Tenstorrent is worth 1% of ARM’s market cap ($1.6B) just from the IP business.
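Here is the arithmetic behind that estimate. The $1.6B figure implies an ARM market cap of roughly $160B at the time of writing. It also covers most of the $2B Series D valuation mentioned above.

```latex
0.01 \times M_{\text{ARM}} = \$1.6\text{B} \quad\Longrightarrow\quad M_{\text{ARM}} = \frac{\$1.6\text{B}}{0.01} = \$160\text{B}

\frac{\$1.6\text{B (IP business alone)}}{\$2\text{B (Series D valuation)}} = 0.8
```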

There are no other high-performance RISC-V IP providers. All other options are for low-end stuff. Tenstorrent has Jim Keller (CPU design living legend) and many other great CPU microarchitects.

The only other competition (SiFive) decided to commit seppuku. Fired the entire high-performance team.

Tenstorrent could completely fail at AI and the company would still be worth at least 1% of ARM’s market cap!

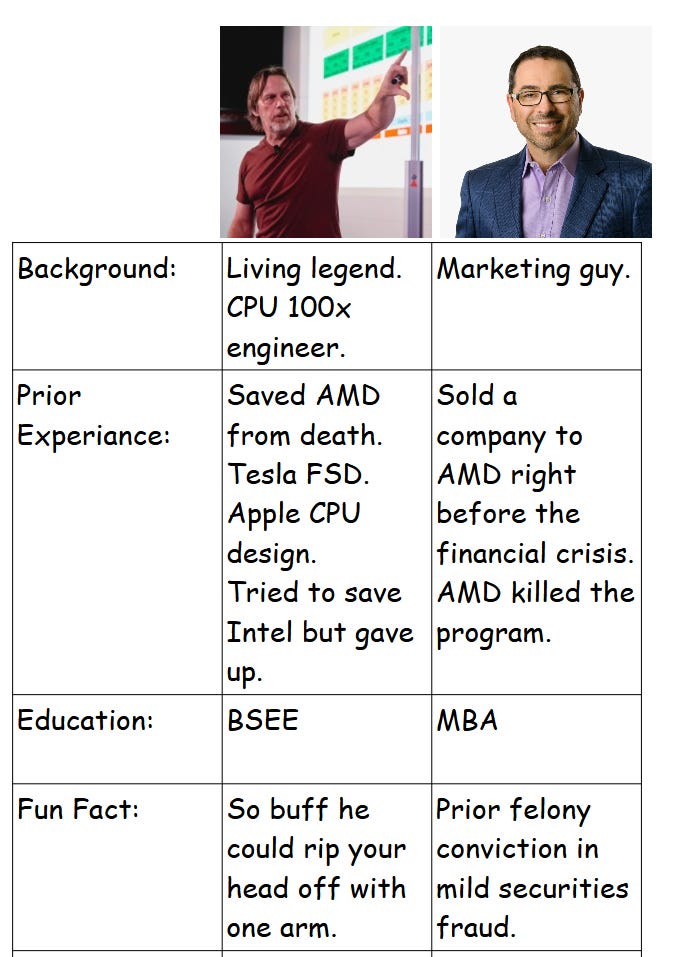

The other valuation method I have is a comparison against Cerebras, which is attempting to IPO and unload equity on uninformed retail investors.

First, let’s compare the CEOs.

Now let’s compare the companies themselves.

I think Tenstorrent should be worth more than Cerebras. Either Tenstorrent is comically undervalued or Cerebras is comically overvalued.

[5] Broader AI Hardware Startup Framework

AI Hardware startups have a problem. A customer problem.

Who is going to buy horizontally supplied AI chips from a startup?

There are many options:

Nvidia

Buy latest generation.

Rent latest generation from Azure, AWS, or GCP

Rent old generation GPUs at comically cheap prices from a neocloud that is about to go bankrupt.

AMD (they keep lowering prices)

Build your own semi-custom chip.

Amazon Trainium, Google TPU, Microsoft Maia, Meta ???

Use Broadcom, Marvell, Alchip, MediaTek, or GUC as a design partner.

Rent semi-custom chips (TPU, Trainium) from GCP or AWS.

Who are all these AI hardware startups going to sell to?

Mega-corps are making their own chips in partnership with five design companies for semi-custom solutions.

AMD makes a meh product, but it is cheap and has lots of HBM.

Smaller companies can easily rent old Nvidia gear from a wide variety of shitcos (sorry “Neoclouds”) at rock bottom prices. H100 hourly rental prices have already collapsed, and Blackwell has not even ramped yet!

Horizontally supplied training hardware is a dead market. Nvidia and the semi-custom hyperscaler chips crowd everyone else out.

Bluntly, I believe every AI hardware startup should give up on training and exclusively focus on inference. There is no hope. Pivot now, maybe live. Keep working on training, definitely die.

As for inference, there is hope but only if cost is insanely low or the performance is insanely high from some exotic strategy. If your chip uses HBM and is built on TSMC N3, it probably won’t be cheap enough to compete with all those depreciated H100s and heavily subsidized Trainiums.

Tenstorrent is using Samsung Foundry SF4X, a dirt-cheap process node. Samsung Ventures have also invested in them, so I assume they got a good deal on tape-out. Finally, Tenstorrent is not using HBM. If demand materializes for Tenstorrent hardware, they can achieve healthy 60% gross margins while deeply undercutting the competition on price.
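The margin claim rests on simple arithmetic. Gross margin is (price − cost) / price, so the price needed for a target margin is cost / (1 − margin). A lower unit cost (cheaper node, no HBM) therefore allows a lower price at the same 60% margin. The unit costs below are purely hypothetical, not real Tenstorrent or competitor figures.

```python
def price_for_gross_margin(unit_cost, margin):
    """Selling price that yields the target gross margin, where margin = (price - cost) / price."""
    if not 0 <= margin < 1:
        raise ValueError("margin must be in [0, 1)")
    return unit_cost / (1 - margin)


# Hypothetical unit costs, purely illustrative.
for label, cost in [("cheaper node, no HBM", 1_000), ("leading-edge node + HBM", 3_000)]:
    price = price_for_gross_margin(cost, 0.60)
    print(f"{label}: cost {cost:,}, price at 60% gross margin = {price:,.0f}")
```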

If Tenstorrent executes on their next generation and spends another 18-36 months improving the software stack, they have a credible chance of meaningful sales into the rapidly growing inference market. I believe Tenstorrent is the only investment-grade AI hardware startup.

With that said, there are two AI hardware startups that I would like to positively mention.

SambaNova has a crazy CGRA architecture that is really cool. It’s so crazy it might work.

Positron has a galaxy brain founder+CEO.

I cannot recommend enough that you watch the incredible 15-minute AI and economics presentation by Positron AI CEO Thomas Sohmers.

There are lots of smart engineers out there. It is very rare for someone to be a top 0.1% engineer AND smart in other topics such as economics, history, game theory, and philosophy.

The investment case for Positron AI is a galaxy brain Founder in Founder mode.

(I hold no economic interest in any private company. Only invest in public markets)

![[V]ery [L]ong [I]ncoherent [W]riteup](https://substackcdn.com/image/fetch/$s_!lVhT!,w_140,h_140,c_fill,f_auto,q_auto:good,fl_progressive:steep,g_auto/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F1335e244-45bb-4add-a677-d9ab1ed74702_875x957.png)

Oh boy, I have so many thoughts ... Let me see if I can organize it sensibly.

To start out, Jim Keller is one reason not to count out Tenstorrent too soon, at least for technical reasons (business/market issues are different). As you said he saved AMD. Not once but twice IMO. K8 and AMD64 (x86-64) could have almost killed Intel in the early 2000s but for the resurrection of P6/Pentium M arch as Core 2.

As for the Tenstorrent architecture, I think there are similarities with the Intel Larrabee approach, which had many simple x86 P54C-type cores and 512-bit vector units. That said, it's possible the baby RISC-V core is a simple MMU-less, in-order, single-pipeline CPU core, which would certainly take less area than an original Pentium's dual-pipelined in-order core with its x86 decode overhead. Even if the area is a low 1%, that doesn't quite tell you the runtime implications. The whole point of a GPU was to eschew the complex control structure of CPUs and take advantage of memory streaming on very fast HBM-type memory, gathering data there and computing as fast as possible for data-parallel workloads.

If a Larrabee like approach didn't work for graphics (and even HPC applications later) then will Tenstorrent work now? Either Larrabee was ahead of its time or there are some fundamental issues. Surely during the graphics wars some might have tried such an approach or even AMD and nVidia might have thought of it?

A GPU can be thought of as a throughput compute device. A crude analogy is that a GPU is like a big Amtrak train. You gather lots of folks at a station with flexible point-to-point cars/vans (CPUs), transport them en masse on dedicated tracks (SM cores/HBM), and then scatter them at the destination station. To torture the analogy further: does it get better if light vans hook up together in a train-like formation, or if each train car has a small engine? I don't know... I suspect a lot will boil down to detailed analysis of the software framework and how well the Tenstorrent architecture lets it keep up with the yearly cadence of nVidia hardware releases and per-year FLOPS increases.

Full Disclosure: I may or may not have positions in AI hardware startups (no easy way to know) due to a semi-index like VC investing approach via Vested Inc.

I believe TT has a strong chance of surviving the upcoming AI hardware downturn, but personally, I'm more excited about its architecture and its potential for advancing spatial computing (not the Apple VR version...). In my opinion, a true breakthrough will come from distributed computing that mirrors the brain, with long-distance asynchronous messaging and local compute + storage serving as the nuclei of intelligence. TT is particularly well-positioned for this, unlike other architectures.