Cerebras is a unique AI-hardware startup.

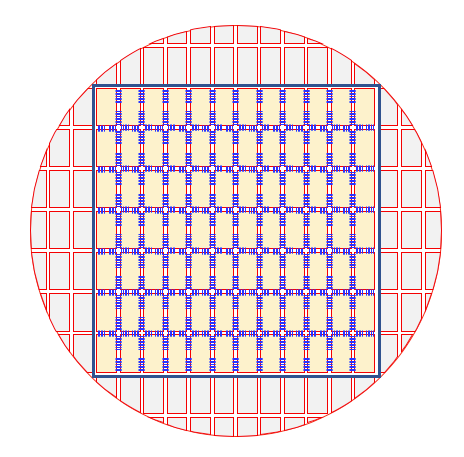

Their product is a chip the size of an entire wafer. Crazy specs. Incredible work was needed to manufacture this marvel of engineering, cool it (very difficult), and deliver power as well.

The Cerebras “Wafer Scale Engine” (WSE) is scale-up on steroids.

Normally, there is a limit to how large a chip can be, the “reticle limit”, and it is approximately 820 mm^2. There is always a little dead space added between each chip called the “scribe line”. This ensures that the wafer can be chopped up without damaging the individual chips.

Cerebras (in partnership with TSMC) has developed proprietary technology that allows them to somehow print wires across scribe lines. They have several dozen patents on this. A truly unique capability.

I believe Cerebras is by far the #1 AI startup in terms of potential. Unfortunately, the gods of scale-up have a huge scaling problem. They cannot scale out.

IMPORTANT:

Irrational Analysis is heavily invested in the semiconductor industry.

Please check the ‘about’ page for a list of active positions.

Positions will change over time and are regularly updated.

Opinions are the author's own and do not represent past, present, and/or future employers.

All content published on this newsletter is based on public information and independent research conducted since 2011.

This newsletter is not financial advice and readers should always do their own research before investing in any security.

Commercial Status:

The Cerebras mission is to disrupt Nvidia in AI hardware, particularly large language models (LLMs). It is not going well.

They do have a bunch of customers for non-AI workloads because of how uniquely suitable the architecture is for certain niche supercomputing tasks.

Drug discovery. // Protein folding.

Oil and gas exploration.

Exotic scientific simulations and research.

Nuclear/Physics/Defense simulations.

Cerebras sells 1-2 of their systems to each customer at around $2-4M ASP. A nice revenue stream but nothing compared to the piles of money Nvidia is printing each quarter with generative AI // LLM demand.

Multi-WSE Supercomputers:

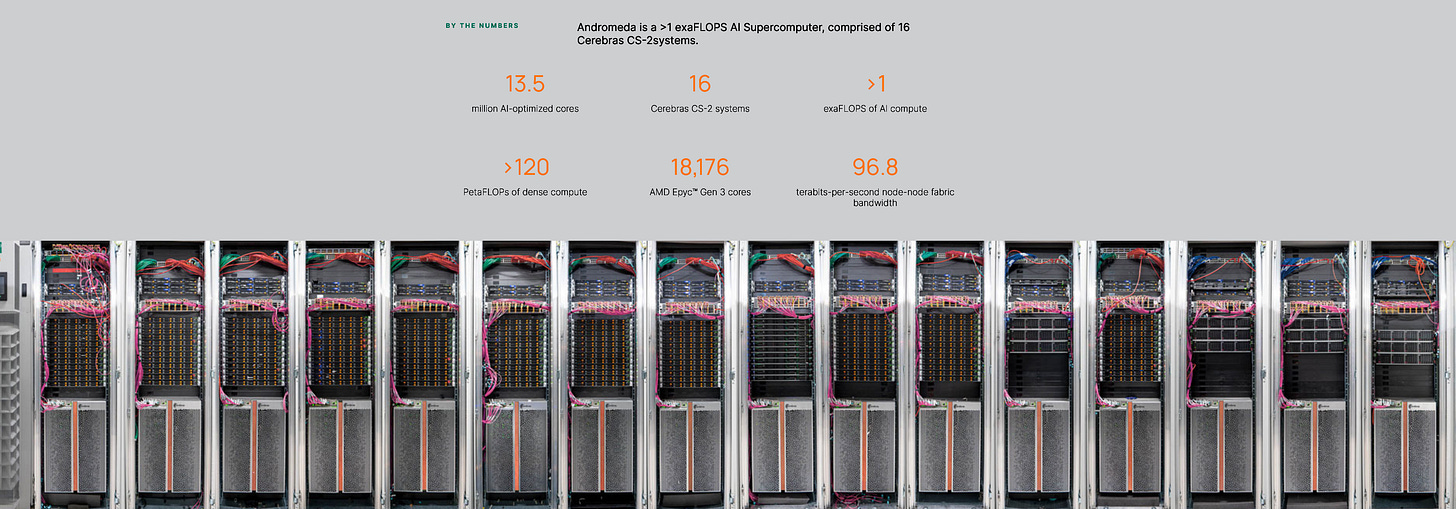

The largest system Cerebras has ever built is composed of 64x WSEs. Each of these takes up ~15U of rack space and generates 20-25 kW of heat. The incredible heat density is why they can only put one WSE per rack.
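To put the heat numbers in perspective, here is a quick back-of-the-envelope sketch. It only multiplies out the figures quoted above (64 systems, ~15U each, 20-25 kW each); the variable and function names are my own, and it ignores everything else in the cluster (MemoryX, SwarmX, switches, cooling overhead).

```python
# Back-of-the-envelope cluster heat, using only the figures quoted in the post.
NUM_SYSTEMS = 64                 # largest cluster built so far
HEAT_KW_RANGE = (20, 25)         # per-system heat, low and high estimate
RACK_UNITS_PER_SYSTEM = 15       # approximate rack space per system


def cluster_heat_kw(num_systems: int, per_system_kw: float) -> float:
    """Total heat for the WSE systems alone (no support hardware)."""
    return num_systems * per_system_kw


low, high = (cluster_heat_kw(NUM_SYSTEMS, kw) for kw in HEAT_KW_RANGE)
total_rack_units = NUM_SYSTEMS * RACK_UNITS_PER_SYSTEM

print(f"{NUM_SYSTEMS} systems: {low / 1000:.2f}-{high / 1000:.2f} MW of heat")
print(f"Rack space: {total_rack_units}U spread across {NUM_SYSTEMS} racks")
```

That works out to roughly 1.3-1.6 MW for the WSE systems alone, at 20-25 kW per rack.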

At Hot Chips 33, Cerebras gave an excellent presentation that tried to hide the copper wall they have hit.

This “near-linear” scaling claim “up to 192 CS-2/WSE” is bovine excrement. Physically building a supercomputer larger than 64 WSEs is impossible because they are held back by 100GbE copper chains. (Thermal density is also a huge problem.)

The rest of this post is divided up into three parts:

Reverse engineering their topology using Hot Chips 33 slides and publicly available images from www.cerebras.net

Copper Chains (why they are stuck)

Unsolicited Advice

[1] Reverse Engineering

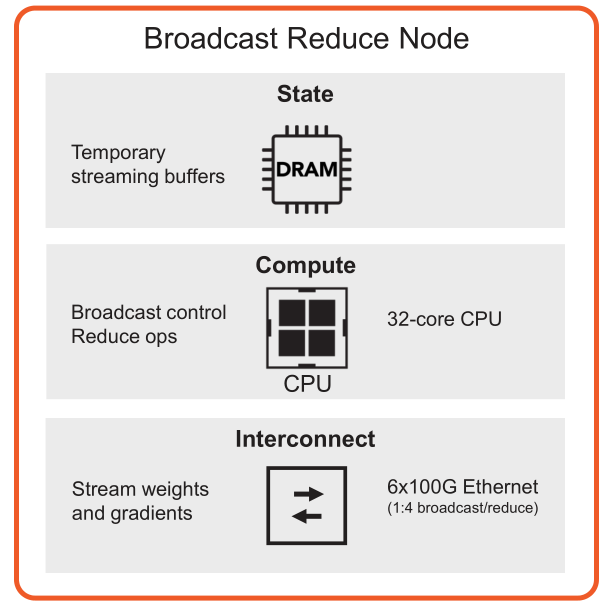

This is my best guess as to what their 16x topology looks like. All of the connections between nodes are 100GbE copper Ethernet. For the local (in-rack) connections, they probably used passive cables to save power and money. For cross-rack, they had to use active cables aka re-timers.

Here are my color codes on top of their official diagram from Hot Chips 33.

[2] Copper Chains

The second-generation WSE (CS-2) has been out for over two years now. Next-gen (CS-3) is probably in bring-up right now. It almost certainly has upgraded everything, especially connectivity.

Each CS-2 has a total of 12x 100GbE connectivity. For their sake, I sincerely hope CS-3 upgraded to 12x 800GbE rather than 12x 400GbE. Nvidia is making huge leaps with their NVLink switches. An 8x gen-on-gen connectivity gain is frankly needed. Otherwise, CS-3 will get crushed by B100 in H1 2025.
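The arithmetic behind that "8x" is simple enough to sketch. The 12-port count and the 100GbE baseline come from the paragraph above; the 400GbE and 800GbE rows are hypothetical CS-3 configurations, not anything Cerebras has announced, and the names in the code are mine.

```python
# Aggregate per-system Ethernet bandwidth for the link speeds discussed above.
PORTS_PER_SYSTEM = 12

# Only the 100GbE row is a known CS-2 figure; the other two are hypothetical.
LINK_SPEEDS_GBPS = {
    "CS-2 (12x 100GbE)": 100,
    "hypothetical 12x 400GbE": 400,
    "hypothetical 12x 800GbE": 800,
}


def aggregate_tbps(ports: int, gbps_per_port: int) -> float:
    """Raw aggregate line rate in Tb/s, ignoring protocol overhead."""
    return ports * gbps_per_port / 1000


baseline = aggregate_tbps(PORTS_PER_SYSTEM, 100)
for label, speed in LINK_SPEEDS_GBPS.items():
    total = aggregate_tbps(PORTS_PER_SYSTEM, speed)
    print(f"{label}: {total:.1f} Tb/s ({total / baseline:.0f}x vs CS-2)")
```

So the gap between the two options is 4.8 Tb/s versus 9.6 Tb/s of raw line rate per system, against 1.2 Tb/s today.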

Regardless of the link speed of CS-3 (400/800 GbE), there is a massive problem that Cerebras must solve.

400/800GbE active copper cables are limited to around 3 meters.

Fiber-optic connectivity is effectively required for Cerebras to scale past 16x CS-3. The existing 64x CS-2 limit is due to this very reason.

Additionally, large clusters of Cerebras systems have severe deployment limitations due to thermal density. Most datacenters are simply not equipped to move so much heat in such a small physical footprint. Fiber-optic Ethernet would allow the WSEs to be spread out without affecting latency or performance.

[3] Unsolicited Advice

Cerebras is using regular AMD x86 CPUs for their fabric data processing. This will frankly not be good enough for next gen. They really need to offload fabric compute to something more efficient such as:

Data Processing Unit [DPU]

AMD Pensando

Nvidia Bluefield (lol)

Intel Mount Evans

FPGA (AMD/Xilinx Alveo)

Custom ASIC (Cerebras made?)

Jumping from active copper to fiber-optic connectivity is going to add a huge amount of power to the overall system. Cerebras must save power somewhere, and there is a lot to save by migrating from CPUs to something more specialized.

Writing three semi-custom Linux drivers for off-the-shelf, outdated 100GbE NICs is not good enough to compete with Nvidia. Next-gen MemoryX+SwarmX needs big upgrades.

As an engineer who is a huge fan of Cerebras, I am concerned that Nvidia is going to destroy them. Cerebras is behind. They have not shared any meaningful info on next-gen and slow-walked the details for first gen MemoryX+SwarmX. Writing a few semi-custom Linux drivers and cobbling together outdated, commodity, off-the-shelf parts will not cut it.