Semianalysis InferenceMAX Launch: Surprising Data

A somewhat irrational take.

Irrational Analysis is heavily invested in the semiconductor industry.

Please check the ‘about’ page for a list of active positions.

Positions will change over time and are regularly updated.

Opinions are the author's own and do not represent past, present, and/or future employers.

All content published on this newsletter is based on public information and independent research conducted since 2011.

This newsletter is not financial advice, and readers should always do their own research before investing in any security.

Feel free to contact me via email at: irrational_analysis@proton.me

Semianalysis just launched the best benchmark I have ever seen. It is tied with the old AnandTech CPU performance reviews and analysis.

https://inferencemax.semianalysis.com/

They have a post on the methodology.

Let’s play a game.

I have not read the post on the methodology. I am going straight to the data blind, assuming SA knows what they are doing and did not make any obvious mistakes.

(a reasonable assumption)

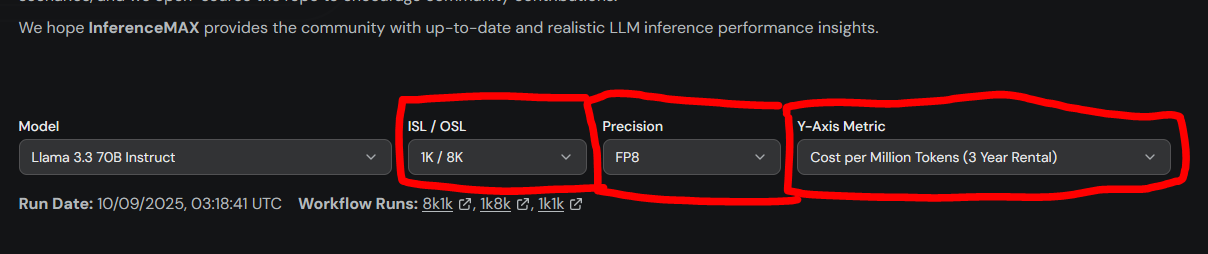

While there are several options, I only care about one configuration.

Reasoning (the most important workload) has high output-sequence length. Picking the highest ratio of OSL/ISL they have. If they had a 20:1 ratio run I would have picked that.

FP4 heavily favors Nvidia. Picking FP8 whenever possible.

Only care about cost from a rental perspective as this is the worst case scenario on pricing.

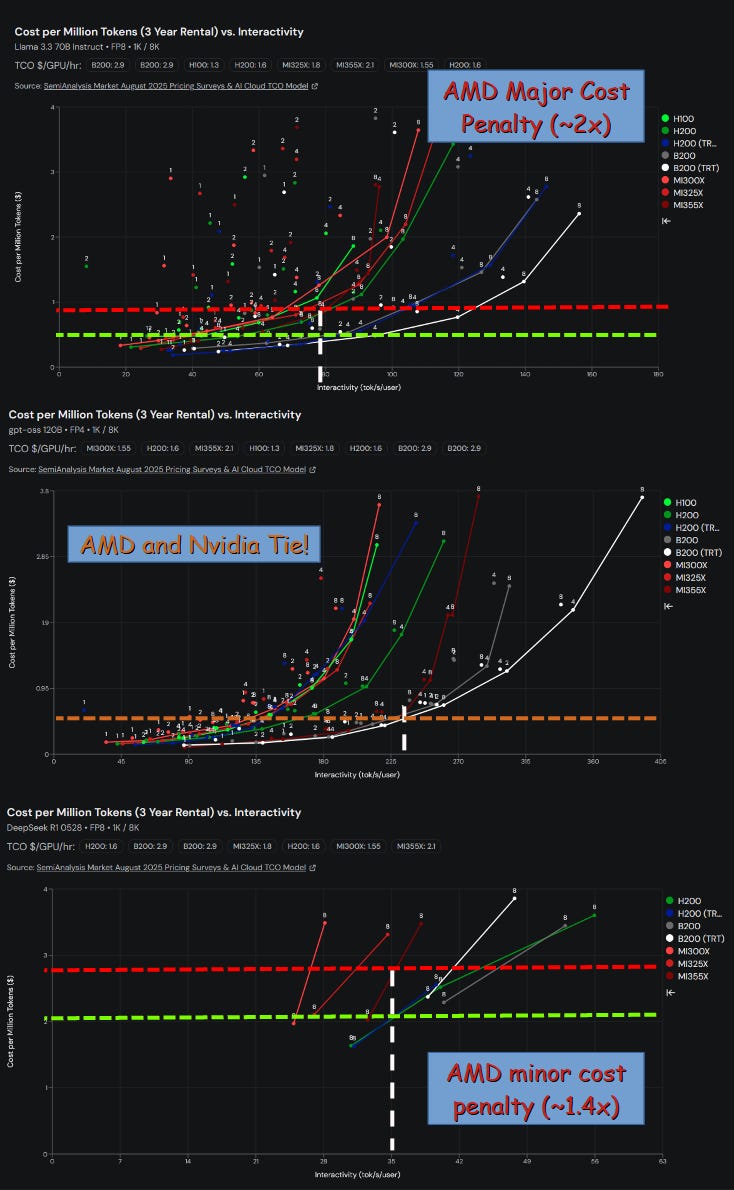

Here are the three models under this configuration down-selection:

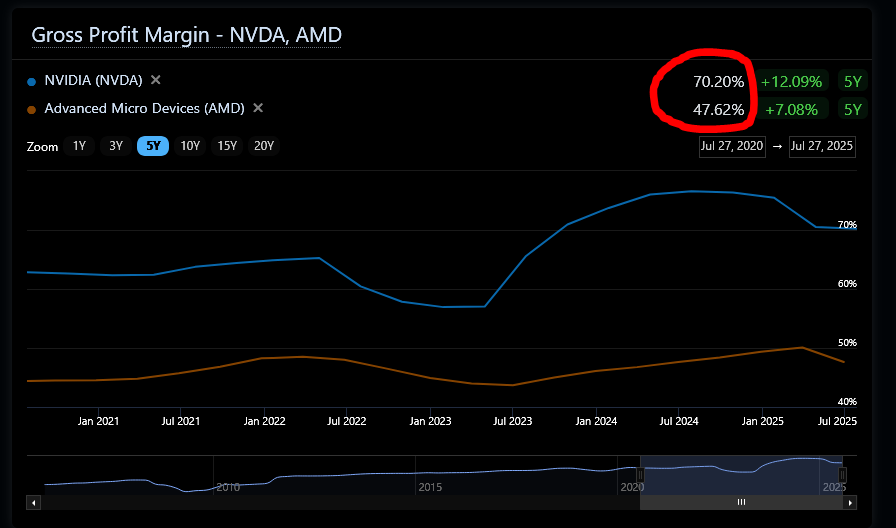

AMD has done surprisingly well, despite serious technological disadvantages. Let’s take a look at the latest corporate gross margins of AMD and Nvidia.

22% gap is quite large.

If AMD were to give away 10% of their own stock as a rebate, that would probably push down gross margins another 10-15% and take AMD from 1 win, 2 losses up to 3 wins.

Intuitively, this is exactly what the OpenAI deal does.

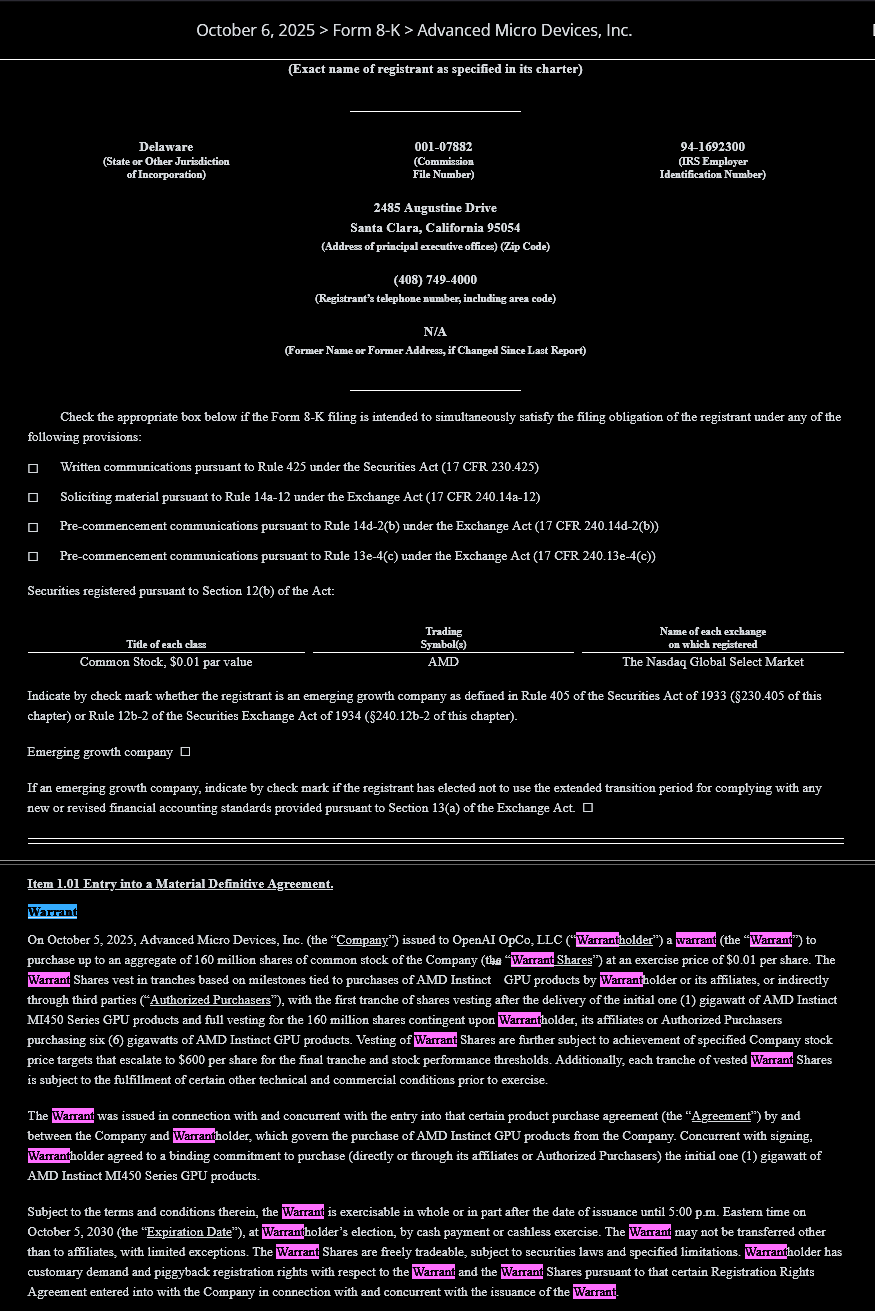

Let’s take a look at the official AMD SEC filing.

https://ir.amd.com/financial-information/sec-filings/content/0001193125-25-230895/d28189d8k.htm

A warrant is basically a fancy call option.

If certain conditions are met, OpenAI gets AMD shares.

Amazon has been the most prolific user of this tactic. Astera Labs, Marvell, Fabrinet, AAOI, …

But Amazon’s shenanigans are nothing compared to what Sue Bae and Sama have cooked up.

The reason I hate these warrant deals is that they mess with my investment process.

I care deeply about gross margins. It’s the first financial number I check.

These kinds of warrant deals are inherently distortive to gross margins.

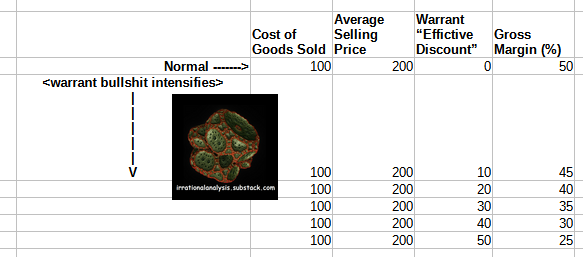

Here is a toy example.

Due to how these warrant deals are structured, they effectively act as a floating discount to products.

If I sell you a thing for $200 but hand you the right to buy 1 share of stock at $0.01 while the stock trades at $20.01/share at the time you buy the thing, that is a $20 effective discount.
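The toy example above can be sketched in a few lines of Python. This is a rough illustration, not a model of the actual AMD-OpenAI terms: it values the warrant at intrinsic value only (market price minus exercise price) and ignores vesting conditions, time value, and accounting treatment. The function names and the `hypothetical_cogs` figure are made up for illustration and do not come from any filing.

```python
def warrant_discount(market_price: float, exercise_price: float, shares: float = 1.0) -> float:
    """Intrinsic value of the warrants handed to the buyer at the time of sale."""
    return max(market_price - exercise_price, 0.0) * shares


def gross_margin(revenue: float, cogs: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - cogs) / revenue


# Numbers from the toy example: a $200 product plus the right to buy
# 1 share at $0.01 while the stock trades at $20.01.
list_price = 200.00
discount = warrant_discount(market_price=20.01, exercise_price=0.01)
effective_price = list_price - discount

# ASSUMPTION: the cost of goods sold is invented purely to show the margin effect.
hypothetical_cogs = 100.00

headline_margin = gross_margin(list_price, hypothetical_cogs)
effective_margin = gross_margin(effective_price, hypothetical_cogs)

print(f"Effective discount: ${discount:.2f}")
print(f"Effective price:    ${effective_price:.2f}")
print(f"Headline margin:    {headline_margin:.1%}")
print(f"Effective margin:   {effective_margin:.1%}")
```

Because the discount is tied to the share price, it floats: if the stock doubles, the same warrant is worth roughly twice as much to the buyer, while the sticker price of the product never moves.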

WARRANT DEALS ARE SNEAKY DISCOUNTS

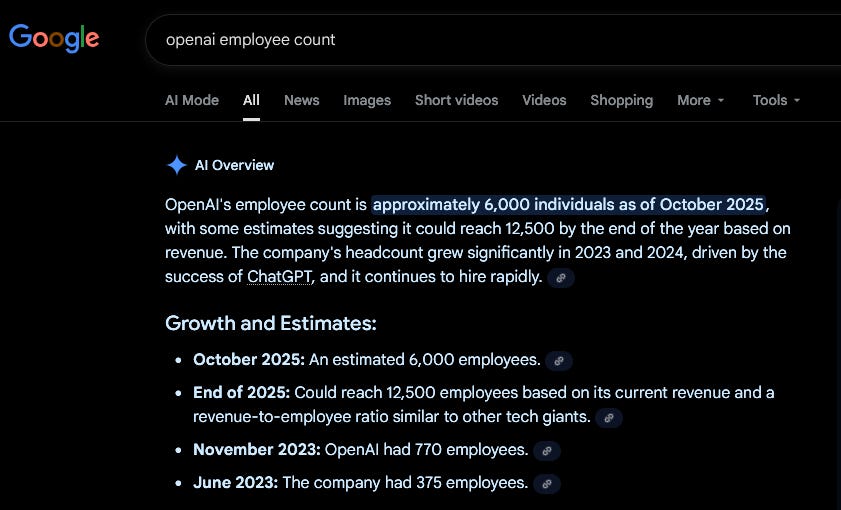

Semianalysis is 40 people and they have three engineers working on this InferenceMax project. Dylan publicly said these numbers several times.

OpenAI has over 6000 employees.

Given how important inference economics are to OpenAI as a business, it is reasonable to assume that OpenAI has somewhere between 30 and 300 full-time employees doing this same testing and analysis.

This is a very reasonable conclusion.

OpenAI knows their internal closed-source model workloads much better.

OpenAI knows their own tokenomics cost structure.

OPENAI KNOWS PRECISELY WHAT DISCOUNT (VIA WARRANT) IS NEEDED TO MAKE AMD HARDWARE ECONOMICALLY VIABLE.

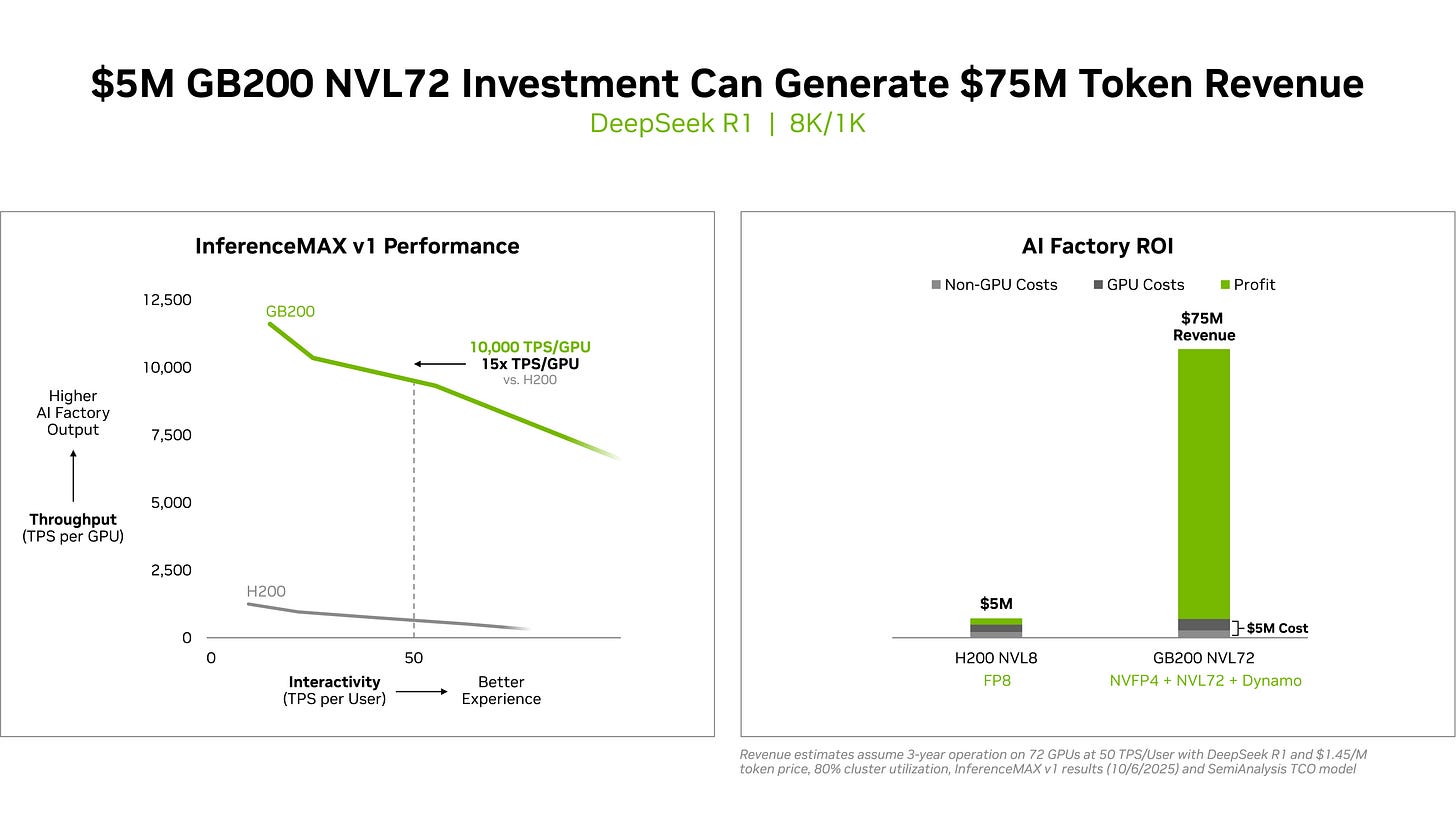

Nvidia has a blog post based on the Semianalysis InferenceMax data today.

https://blogs.nvidia.com/blog/blackwell-inferencemax-benchmark-results/

The message is clear:

Our product is crazy profitable.

This is true. Great for Nvidia.

Now… I would like to remind everyone here that at the time of writing:

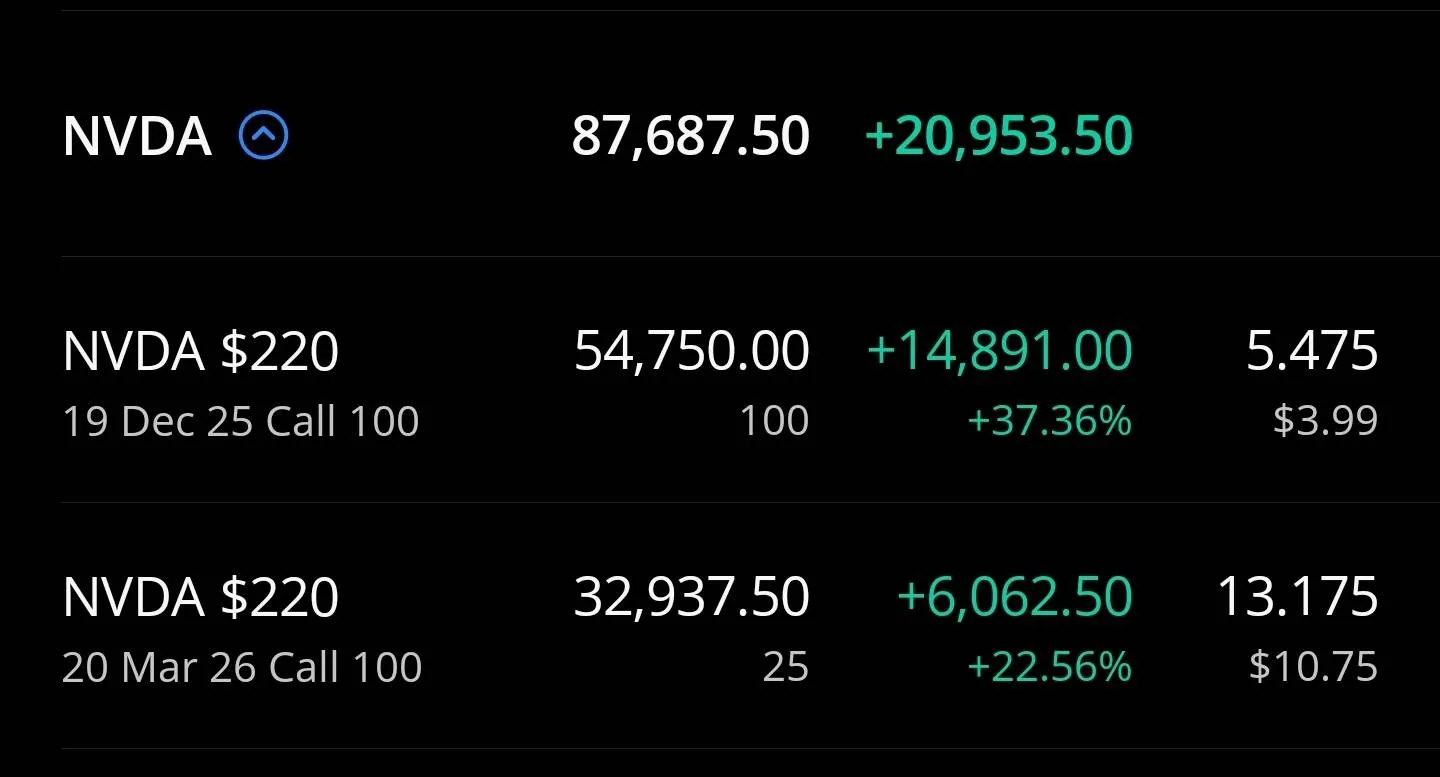

I own ~$240K worth of Nvidia shares at an average price of $13.91/share.

I own ~$87K worth of Nvidia call options at various strikes, expiration, and cost basis.

I have zero economic exposure to AMD.

InferenceMax Data has Clear and Obvious Conclusions:

Nvidia

Comically undervalued.

AI inference is crazy profitable on GB200 NVL72.

Demand is going thru the roof.

Nvidia has the most profitable hardware.

Nvidia systems have the most capabilities for the largest and best models.

NVL72 engineering benefits directly translate into massive profitability enablement.

AMD

OpenAI warrant deal is genius.

AMD is actually going to hit $600/share **eventually**, given that the last tranche of warrants appears to vest upon the stock hitting that share price.

I have a massive financial incentive to tell you AMD sucks, and yet I am unironically saying InferenceMAX is positive for **both** Nvidia and AMD, but for different reasons.

Sometimes, investing can be simple.

My read on the inference report is that AMD inference chips surprisingly do not suck. Competitive until you go against GB. Most likely far behind if tested against GB300. Imo, the biggest factor for inference is power consumption per token. Going to 2 nm is important for that.

This is one of the sharpest takes on the AMD-OpenAI warrant deal I've seen. Your framing of warrants as 'sneaky discounts' that distort gross margins is brilliant - it makes the whole structure much clearer. The logic that OpenAI with 30-300 FTEs working on inference economics knows exactly what discount is needed to make AMD viable is devastating and elegant. I also appreciate your intellectual honesty here - you have massive financial skin in the NVDA game and still acknowledge the AMD warrant deal is genius. The observation that AMD will hit $600/share as that's where the last tranche vests is a clever catch. My only question is whether the warrant dilution over time will be material enough to offset the revenue growth from actually winning these hyperscale deployments.