[GB200 NVL72] The Mainframe of Doom

ARM's incredible opportunity, at Intel's expense...

IMPORTANT:

Irrational Analysis is heavily invested in the semiconductor industry.

Please check the ‘about’ page for a list of active positions.

Positions will change over time and are regularly updated.

Opinions are authors own and do not represent past, present, and/or future employers.

All content published on this newsletter is based on public information and independent research conducted since 2011.

This newsletter is not financial advice and readers should always do their own research before investing in any security.

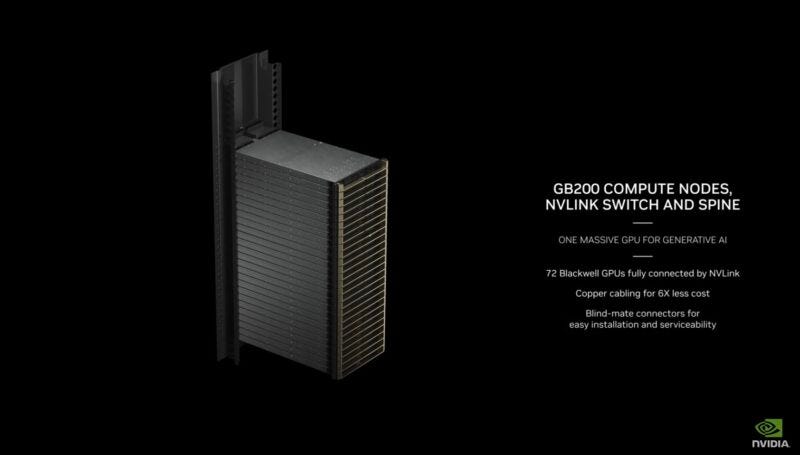

This is the Nvidia GB200 NVL72. A modern re-incarnation of the classical IBM mainframe. An existential threat to multiple hypserscaler internal projects, AI hardware startups, and merchant silicon competitors.

I will have a much more detailed technical deep-dive on this magnificent box later. Plenty of time as GB200 NVL72 won’t be shipping until Q4 2024-ish.

For now, there is a more pressing matter… on the CPU front.

Historically, Nvidia has used dual-socket Intel CPU servers to drive their 8-GPU baseboards. There is one generation (DGX A100) that uses AMD CPUs because Intel was continuously failing to launch a PCIe 4 compliant Xeon. Nvidia hates AMD. Hilarious that Intel failed so hard, Nvidia had no choice but to source CPUs from AMD.

A 4:1 ratio of GPU to CPU has been standard. Nvidia tried to push Grace-Hopper (1:1 ratio) and failed. TCO did not make sense. Almost nobody bought it.

However, the new GB200 NVL72 has a 2:1 ratio and is much more compelling from a power/thermal/system/networking integration perspective. This time is different.

GH200 was ~2% of overall volume… optimistically. If this JPM intel is even remotely correct at ~30%, then we have a 15x gen-on-gen volume increase.

A titanic shift in datacenter CPU market share.

An incredible opportunity for ARM (LTD).

A terrifying prospect for Intel.

Contents:

What is a mainframe?

Grace, ARM (RTL) V2, and TCO

Why Nvidia is bringing mainframes back.

Jensen’s Benevolent Trade Offer

The Great Value Migration

[1] What is a mainframe?

Mainframes are a category of computer that had peak popularity in the 1970’s, typically exhibiting the following attributes:

Strong focus on reliability, uptime, and stability.

Vertical integration at rack level.

Sold to high-end commercial customers at high gross-margins.

Long (decades) service life.

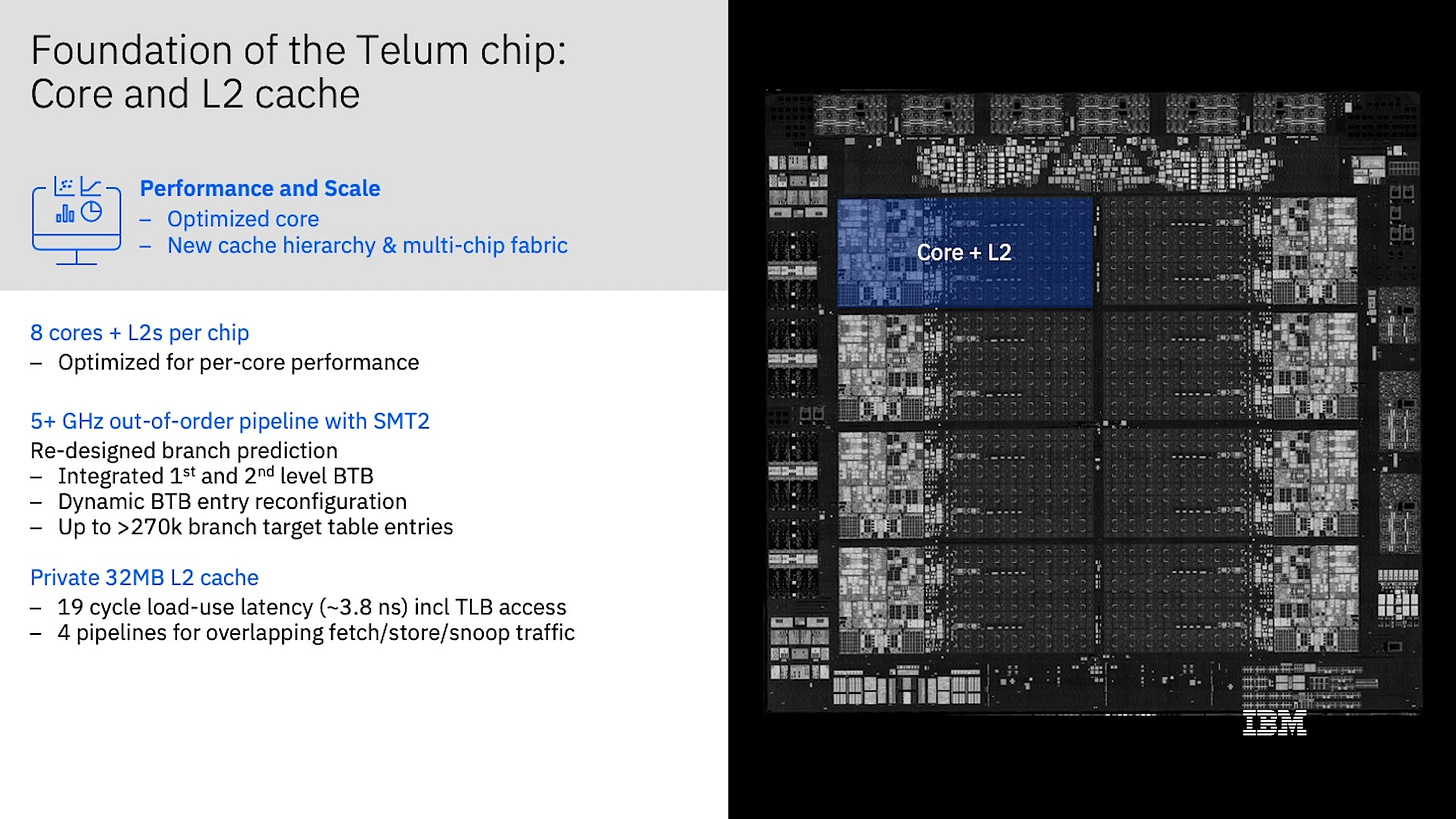

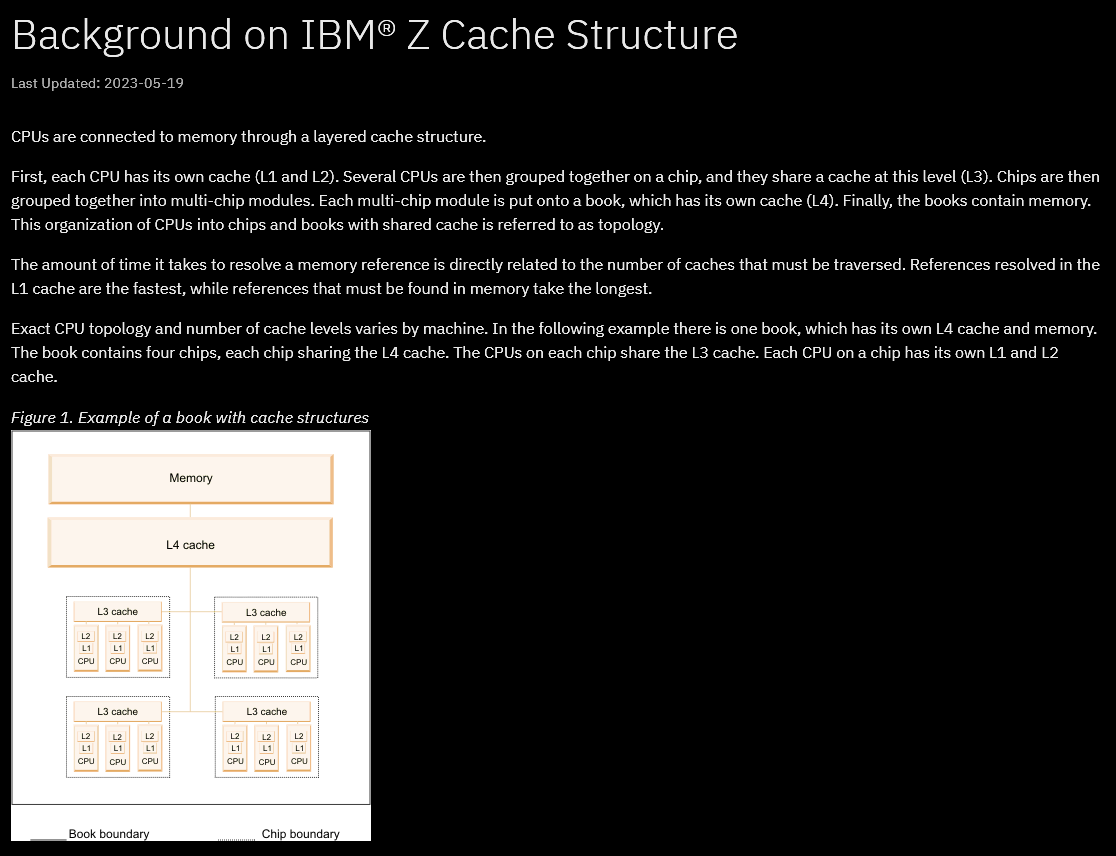

Due to high costs, low (nonexistent) modularity, and concentrated sourcing, mainframes have slowly fallen out of favor over the decades. IBM still sells mainframes under the Telum/Z brands. Very interesting architecture which will be a topic for another time.

For now, all you need to know is mainframes are vertically-integrated, exotic, ultra-expensive, rack-scale computers. Primarily used for applications that demand 99.9999999% (yes that is advertised) uptime. For example, credit card transaction processing.

[2] Grace, ARM (RTL) V2, and TCO

To understand why GB200 NVL72 changes the customer calculus on Nvidia Grace, we must first understand what Grace is.

Grace is a mediocre datacenter CPU created for one purpose: feed the GPUs. This is not an HPC part like Nvidia marketing claims.

Nvidia Grace uses the ARM (RTL) V2 CPU core microarchitecture. It is a “B-tier” uarch, decisively losing to Intel and AMD’s competing offerings.

What makes Nvidia Grace special is the interconnect fabric they have created. Getting mesh topologies right is difficult. As an aside, ARM (RTL) also sells their own interconnect fabric which Nvidia does not use because it’s bad. Latency problems and limited scaling options.

Grace is not meant to be a good CPU. It is meant to be a “good enough” CPU that can accomplish the following:

Run the CUDA software scheduler.

Feed the GPUs.

Expand memory and host additional high-speed I/O.

These goals were accomplished and yet, the Grace-Hopper Superchip completely flopped in terms of sales/volume. This is because Nvidia charges 75-85% gross margins and Grace adds significantly to CapEx while only making a minor dent in power/OpEx.

The math is very simple to model using die-size, public gross margin data, and TDP. 1:1 CPU:GPU ratio with Grace-Hopper is decisively more expensive compared to the standard 4:1 ratio with Intel/AMD-Hopper. Grace did not deliver enough value to justify Nvidia-level gross margins… until GB200 NVL72.

[3] Why Nvidia is bringing mainframes back.

GB200 packages two Blackwell GPUs per one Grace CPU. However, Blackwell is two recital-limit logic dies linked together into a single NUMA node (one GPU). Thus, Nvidia has gone from a 1:1 ratio in Grace-Hopper to effectively 4:1 with Grace-Blackwell.

This alone brings the new platform with near TCO-parity compared to the x86 version that uses Intel Emerald Rapids.

Nvidia has expanded their proprietary NVLink (Ethernet-style serdes) to 200G first… over passive copper!

Anyone who tries to duplicate this level of connectivity and coherency with non-NVLink is going to eat massive power/TCO penalties from all the active copper and/or optical cables needed. Even then, the performance of GB200 NVL72 probably wins.

This box is a TCO monster. Massive performance gains. Massive power/thermal load reduction. A tightly integrated, liquid cooled mainframe that saves ~30% power.

The AI mainframe/appliance is here.

I believe the JPM supply-chain check is directionally correct. 10-20X share gain of the Grace-bundled SKUs is not just plausible. It is almost guaranteed because of Jensen’s recent galaxy-brain strategy.

[4] Jensen’s Benevolent Trade Offer

Remember that the reason mainframes fell out of favor was high cost and single sourcing of hardware. People did not want to be beholden to IBM and chose to prop up an ecosystem of interoperable parts which allowed multi-sourcing. This is why PCIe, Ethernet, standardized memory interfaces, and so on exist.

GB200 NVL72 is an existential threat. All of the large cloud hyperscalers have strong political/strategic motivations to avoid buying this box. Many customers are actively trying to ditch InfiniBand for Ethernet as it is.

One way to make sure this glorious AI mainframe sells well is to make the performance very good via excellent engineering and tight vertical integration. Nvidia has accomplished this with 200G SerDes over passive copper and crazy good liquid cooling at to all the chips at a rack-level.

But sometimes, technical merit is not enough. A political angle is also needed. This is where Jensen’s benevolent trade offer comes in.

Nvidia has been propping up small cloud providers such as Coreweave with direct investment and favorable allocation. If the big cloud hyperscalers refuse to buy GB200 NVL72, then Nvidia will simply sell these superior systems to Coreweave and friends.

Folks interested in AI don’t care where the GPU hours come from. AI customers dont care about HPC CPU performance. Grace feeding lots of memory-coherent Blackwell GPUs are perfect.

Buy the AI mainframe and make Nvidia’s moat deeper or lose share to startups that can YOLO raise capital off of GPU financing and favorable allocation.

All the internal cloud hyperscaler chip projects (excluding Google TPU) are on a timer now. Build a system (not chip!) that can fight Nvidia head-on or die. Renting out highly subsidized, low-quality chips (COUGH COUGH Trainium/Inferentia COUGH COUGH) wont work for much longer.

[5] The Great Value Migration

At the time of writing (late March, 2024), I feel that the market does not fully grasp how important GB200 NVL72 is for ARM and Intel.

After reviewing some sell-side models, I think I am right. ARM datacenter/infrastructure revenue should be growing much higher than what is modeled by sell-side. Probably a step function in CY Q4 2024 // FY Q3 2025 instead of weak, linear Q-on-Q growth.

Sell-side seems to be modeling flat revenue for Intel’s datacenter division. There is no way in hell those numbers are correct given the severe headwinds.

AMD will continue to gain share with Turin.

Nvidia will devourer share with Grace.

GPU CapEx spend will continue to obliterate CPU spend.

The economic value of Intel CPUs is about to get devoured by Nvidia and ARM (LTD). Nvidia is happy to take some minor dilution to dig the moat deeper and usher in an era of AI mainframes. ARM (LTD) will be delighted to grow in datacenter/AI with much higher royalties % on much higher ASP chips as the mobile market enters terminal stagnation.

The role of Grace is worth watching, indeed. How is its memory going to be used? It is much cheaper than HBM per GB, so makes sense for Nvidia to load it up, but it will be interesting to see if AI gets creative using this slower but local tier. Or is it just a glorified IO buffer space, plus space for Grace to run system management code, accumulate metrics?

But for sure, there don't seem to be any "classic" CPUs or servers in the GTC rendering of the 32,000 GPU cluster. Maybe a few hidden in some of the racks.

Thanks, great article. Any idea re ARM's royalty per Grace CPU? Tae mentioned $100+ per Cobalt 100 CPU but that's a 128-core and MSFT is buying a subsystem from ARM (2x royalty rate). Assume 74-core CPU that is not a subsystem would attract a lower royalty? Appreciate Nvidia is taking V2 cores vs. N2 for MSFT. Would still think MSFT pays more on a per core basis vs. Nvidia. Any pushback?